How Interactive AI Avatars Adapt in Real Time for Immersive Experiences

Key Takeaways

Interactive AI avatars enable real-time, personalized, and lifelike interactions that go far beyond static visuals or pre-recorded content. By combining seamless voice synchronization, expressive gestures, and access to relevant knowledge sources, they create conversations that feel both natural and responsive. With D-ID’s technology, developers can quickly build and customize these avatars to match brand tone, integrate them into websites, apps, or virtual platforms, and deliver multilingual, context-aware experiences that engage users on a deeper level.

What Are Interactive AI Avatars?

Interactive AI avatars are digital characters that can engage in live conversations with users. These avatars combine speech recognition, natural language processing, and visual rendering to simulate human-like presence across websites, mobile apps, or virtual platforms. Unlike static avatars or pre-recorded videos, interactive avatars respond to questions, adapt based on context, and convey emotion through synchronized facial movement and gestures.

At their core, interactive AI avatars act as real-time digital presenters or assistants. They are frequently deployed as front-line interfaces for customer service, onboarding, education, or product demos. By combining generative AI models with real-time rendering and synthetic speech, they deliver dynamic avatar experiences that feel more natural and less scripted.

Their effectiveness stems from their ability to connect with users on both verbal and nonverbal levels. They listen, process, and respond with minimal delay, which makes interactions feel more immediate and human-centered.

How Real-Time AI Avatar Technology Works

Creating a responsive avatar experience requires multiple systems working together in near-perfect sync. Real-time AI avatars depend on several core technologies to make the interaction feel believable:

1. Speech Recognition and Language Understanding

When a user speaks or types, the avatar platform captures the input, either by transcribing speech with automatic speech recognition (ASR) or by taking typed text directly. A large language model (LLM), often combined with retrieval-augmented generation (RAG), then interprets the user's intent and generates a coherent response.
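To make this flow concrete, here is a minimal Python sketch of a single conversational turn. The `transcribe` and `generate` callables are hypothetical placeholders for whatever ASR engine and LLM (with or without RAG) you use; nothing here is a D-ID API. Typed text is used as-is, while audio is transcribed first.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class UserInput:
    text: Optional[str] = None     # typed input, used as-is
    audio: Optional[bytes] = None  # spoken input, transcribed first

# Hypothetical component signatures: swap in your real ASR and LLM clients.
Transcriber = Callable[[bytes], str]
Generator = Callable[[str], str]

def handle_turn(user_input: UserInput, transcribe: Transcriber, generate: Generator) -> str:
    """Normalize one user turn to text, then ask the language model for a reply."""
    if user_input.text is not None:
        utterance = user_input.text.strip()
    elif user_input.audio is not None:
        utterance = transcribe(user_input.audio).strip()
    else:
        raise ValueError("UserInput needs either text or audio")
    if not utterance:
        raise ValueError("nothing to respond to")
    return generate(utterance)

if __name__ == "__main__":
    # Stand-ins for demonstration only.
    fake_asr = lambda audio: audio.decode("utf-8")  # pretends the bytes are already a transcript
    fake_llm = lambda prompt: f"You asked: {prompt}"
    print(handle_turn(UserInput(text="What are your hours?"), fake_asr, fake_llm))
    print(handle_turn(UserInput(audio=b"Do you ship abroad?"), fake_asr, fake_llm))
```

Keeping ASR and the LLM behind plain callables makes it easy to swap providers or test the turn logic without network calls.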

2. Live Rendering and Animation

Once a response is generated, the avatar’s face and body must animate in real time. This includes lip-sync, blinking, head movements, and micro-expressions that match the emotional tone of the response. D-ID’s real-time video synthesis engine, for example, turns text into lifelike video using a still image as the base.

3. Real-Time Voice Sync

A synthetic voice, produced by text-to-speech (TTS), reads the generated response aloud. The voice can be selected by gender, accent, tone, or language, and high-fidelity voice models help keep the pacing and intonation natural. Lip movement is aligned to the voice at the frame level to maintain realism.
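Frame-level alignment can be pictured as sampling the speech timeline once per video frame. The sketch below assumes the TTS engine returns phoneme timings as `(phoneme, start, end)` tuples in seconds, and it uses a small, hypothetical phoneme-to-viseme (mouth shape) table. Production lip-sync models are far richer, so treat this as an illustration of the timing logic only.

```python
from typing import List, Tuple

# Hypothetical, heavily simplified phoneme-to-viseme (mouth shape) table.
PHONEME_TO_VISEME = {
    "M": "closed", "B": "closed", "P": "closed",
    "F": "lip_teeth", "V": "lip_teeth",
    "AA": "open", "AE": "open", "AH": "open",
    "OW": "round", "UW": "round",
}

def visemes_per_frame(phonemes: List[Tuple[str, float, float]], fps: int, duration: float) -> List[str]:
    """Pick one mouth shape per video frame.

    phonemes holds (phoneme, start_sec, end_sec) tuples. Each frame uses the
    phoneme active at the frame's midpoint; unknown phonemes map to "neutral"
    and silent frames to "rest".
    """
    n_frames = int(round(duration * fps))
    frames = []
    for i in range(n_frames):
        t = (i + 0.5) / fps  # midpoint of frame i, in seconds
        shape = "rest"
        for phoneme, start, end in phonemes:
            if start <= t < end:
                shape = PHONEME_TO_VISEME.get(phoneme, "neutral")
                break
        frames.append(shape)
    return frames

if __name__ == "__main__":
    timings = [("M", 0.0, 0.1), ("AA", 0.1, 0.3)]
    print(visemes_per_frame(timings, fps=10, duration=0.4))  # ['closed', 'open', 'open', 'rest']
```

Sampling at each frame's midpoint is one simple choice; real systems also blend neighboring shapes so the mouth does not snap between poses.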

4. Knowledge Integration

Some avatars connect to product manuals, internal documentation, or CRM data to answer specific queries. This enables domain-specific, knowledge-driven interaction that goes beyond generic chatbot responses.
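A knowledge-grounded avatar typically retrieves relevant passages before the model answers. This sketch uses simple word overlap as a stand-in for the embedding-based vector search most RAG systems use. `retrieve` and `build_prompt` are hypothetical helpers, and the sample documents are made up.

```python
import re
from typing import List, Set

def tokenize(text: str) -> Set[str]:
    """Lowercase word set; a crude stand-in for embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Return up to k docs sharing the most words with the query (ties keep input order)."""
    q = tokenize(query)
    scored = [(len(q & tokenize(doc)), i, doc) for i, doc in enumerate(docs)]
    scored = [s for s in scored if s[0] > 0]  # drop docs with no overlap
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [doc for _, _, doc in scored[:k]]

def build_prompt(query: str, docs: List[str]) -> str:
    """Ground the model's answer in the retrieved passages."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

if __name__ == "__main__":
    manual = [
        "Returns are accepted within 30 days.",
        "Our office opens at 9am.",
        "Refunds for returns take 5 business days.",
    ]
    print(build_prompt("How long do returns take?", manual))
```

The prompt then goes to the LLM, which is what lets the avatar answer from your own documentation instead of general knowledge.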

5. API and Interface Integration

The real-time AI avatar is embedded into the user interface through API calls or SDKs. It can be deployed on web pages, mobile apps, kiosks, or VR environments. Developers often use webhooks or event triggers to connect avatar actions with user behavior.
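Connecting avatar actions to user behavior usually means mapping events to handlers. The in-process dispatcher below is a hypothetical stand-in for a webhook or SDK event system; the event names and the `say:`/`gesture:` action strings are illustrative assumptions, not a real avatar SDK's vocabulary.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], str]

class AvatarEventBus:
    """Hypothetical in-process dispatcher standing in for webhooks or SDK events."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)

    def on(self, event: str, handler: Handler) -> None:
        """Register a handler that turns an event payload into an avatar action."""
        self._handlers[event].append(handler)

    def emit(self, event: str, payload: dict) -> List[str]:
        """Run every handler for the event and return the actions they produce."""
        return [handler(payload) for handler in self._handlers.get(event, [])]

if __name__ == "__main__":
    bus = AvatarEventBus()
    bus.on("pricing_page_viewed", lambda p: f"say:Need help choosing a plan, {p['name']}?")
    bus.on("user_idle_30s", lambda p: "gesture:wave")
    print(bus.emit("pricing_page_viewed", {"name": "Ana"}))
    print(bus.emit("unknown_event", {}))  # no handlers, so []
```

In a deployed system the `emit` call would typically sit inside an HTTP webhook endpoint or a front-end event listener.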

Together, these technologies form the foundation of real-time, adaptive avatar systems. Whether they serve as virtual sales reps, HR agents, or e-learning instructors, interactive avatars bring real-time intelligence to the forefront of digital communication.

Benefits of Dynamic AI Avatars for Developers and Enterprises

Interactive avatars provide value at every stage of product development and customer engagement. They are particularly valuable for teams building AI agents or customer-facing applications that require a human element.

Here’s what developers and enterprises can gain from real-time avatar solutions:

Faster Deployment for Conversational Interfaces

D-ID’s platform allows developers to launch interactive avatars without extensive 3D modeling or motion capture. This can save weeks of development time and lowers the barrier to entry for integrating AI into user-facing tools.

High Engagement and Conversion Rates

A face-to-face element can keep users on websites and apps longer. Avatars tend to hold attention better than plain text or audio and can make users more likely to take action, such as signing up, exploring features, or making a purchase.

Easy Personalization Across Use Cases

Developers can tailor avatars based on industry, region, or brand tone. Custom scripts, voice styles, and knowledge sources enable a high degree of personalization. One avatar can be customized to serve different audiences by simply updating its configuration.
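Serving several audiences from one avatar can be as simple as layering per-audience overrides on a shared base configuration. The `AvatarConfig` fields and audience names below are hypothetical and do not reflect any real D-ID schema.

```python
from dataclasses import dataclass, replace
from typing import Dict, Tuple

@dataclass(frozen=True)
class AvatarConfig:
    # Hypothetical fields for illustration; not a real D-ID schema.
    voice: str = "neutral-en"
    language: str = "en"
    tone: str = "friendly"
    knowledge_sources: Tuple[str, ...] = ("faq",)

BASE = AvatarConfig()

# Only the fields that differ per audience are listed.
AUDIENCE_OVERRIDES: Dict[str, dict] = {
    "enterprise_sales": {"tone": "formal", "knowledge_sources": ("faq", "pricing")},
    "spain_support": {"voice": "neutral-es", "language": "es"},
}

def config_for(audience: str) -> AvatarConfig:
    """Apply an audience's overrides to the shared base; unknown audiences get the base."""
    return replace(BASE, **AUDIENCE_OVERRIDES.get(audience, {}))

if __name__ == "__main__":
    print(config_for("spain_support"))
    print(config_for("enterprise_sales"))
```

Keeping overrides as data means a new audience is a new dictionary entry rather than a new avatar.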

Flexible Integration for Any Stack

With support for REST APIs, WebRTC, and JavaScript SDKs, developers can plug avatars into almost any digital experience. Whether the interface is a customer portal, healthcare assistant, or B2B sales tool, avatars add an engaging layer of interaction.

Multilingual Capabilities

Enterprises operating in global markets need content localized in multiple languages. D-ID’s avatars support real-time language switching and auto-translated responses, helping businesses reach broader audiences without needing to build new tools from scratch.
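Before switching languages, an application has to decide which language to respond in. A minimal sketch, assuming the user's preferences arrive as an ordered list of BCP 47-style tags (for example, parsed from a browser's `Accept-Language` header) and that `supported` is a hypothetical set of languages your avatar is configured for:

```python
from typing import Iterable, Set

def pick_language(preferred: Iterable[str], supported: Set[str], default: str = "en") -> str:
    """Return the first supported language from the user's ordered preferences.

    Tries each exact tag (e.g. "pt-BR") before its primary subtag ("pt");
    falls back to the default when nothing matches.
    """
    for tag in preferred:
        tag = tag.strip()
        if tag in supported:
            return tag
        primary = tag.split("-")[0].lower()
        if primary in supported:
            return primary
    return default

if __name__ == "__main__":
    avatar_languages = {"en", "es", "pt", "fr"}
    print(pick_language(["pt-BR", "en"], avatar_languages))  # pt
    print(pick_language(["de-DE"], avatar_languages))        # en
```

Checking the exact regional tag first lets you offer a dedicated regional voice where one exists while still falling back gracefully.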

Improved Accessibility and Inclusivity

Interactive avatars can include subtitles, alternative voice outputs, and simplified language modes to make content accessible to a wider range of users. This is especially important for compliance in regulated industries or educational programs.
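Subtitles for avatar speech can be delivered as a WebVTT track, which browsers render natively through the HTML `<track>` element. This sketch assumes you already have timed text segments (for example, from the TTS engine's timing output) as `(start_sec, end_sec, text)` tuples; the helper names are hypothetical.

```python
from typing import List, Tuple

def vtt_timestamp(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp (hh:mm:ss.ttt)."""
    ms = int(round(seconds * 1000))
    h, rem = divmod(ms, 3_600_000)
    m, rem = divmod(rem, 60_000)
    s, ms = divmod(rem, 1000)
    return f"{h:02d}:{m:02d}:{s:02d}.{ms:03d}"

def to_webvtt(segments: List[Tuple[float, float, str]]) -> str:
    """Build a WebVTT caption file from (start_sec, end_sec, text) segments."""
    lines = ["WEBVTT", ""]
    for start, end, text in segments:
        lines.append(f"{vtt_timestamp(start)} --> {vtt_timestamp(end)}")
        lines.append(text)
        lines.append("")  # blank line ends each cue
    return "\n".join(lines)

if __name__ == "__main__":
    print(to_webvtt([(0.0, 1.5, "Hi, I'm your guide."), (1.5, 3.25, "How can I help?")]))
```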

In short, real-time AI avatars help developers build more intuitive, immersive interfaces, without sacrificing speed or scalability.

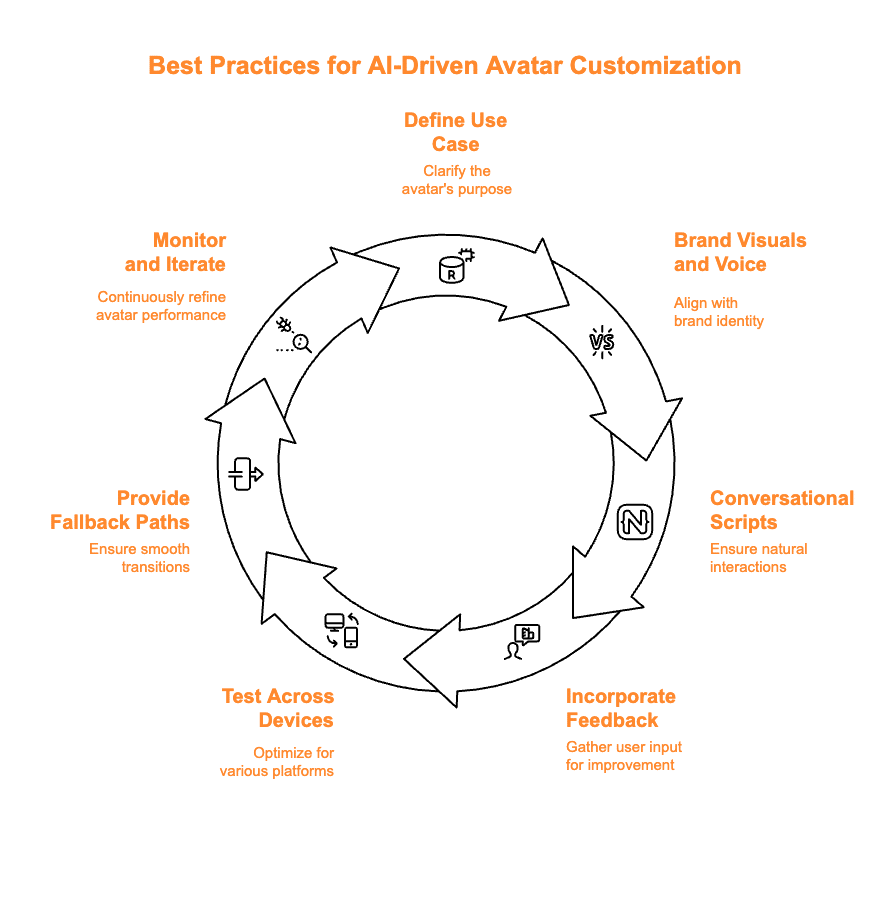

Best Practices for AI-Driven Avatar Customization

Building an interactive avatar is just the beginning. To create truly immersive and impactful experiences, developers should apply thoughtful design and UX principles during the customization process.

Start With a Clear Use Case

Define what your avatar is meant to do. Is it guiding users through a product? Handling customer inquiries? Delivering training? This clarity helps shape the avatar’s tone, pacing, and visual presentation.

Use Branded Visuals and Voices

An avatar’s appearance and voice should reflect your brand identity. Select facial features, clothing, and background elements that complement your product or service. The same applies to the voice, whether it’s formal, friendly, or technical.

Keep Scripts Conversational and Natural

Avoid overly robotic or formal language. Write responses in a tone that feels human and relatable. Interactive avatars are most effective when they sound like a real person guiding the user through a process.

Incorporate Feedback Loops

Allow users to rate avatar responses or provide feedback. This gives you data to improve performance over time. You can also use analytics to track drop-off points or identify content gaps.
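A simple way to act on ratings is to flag intents whose average score falls below a threshold. The sketch assumes ratings are logged as `(intent, score)` pairs on a 1-5 scale; the function name, threshold, and minimum sample size are hypothetical defaults you would tune.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

def low_rated_intents(
    ratings: List[Tuple[str, int]], threshold: float = 3.5, min_count: int = 3
) -> Dict[str, float]:
    """Return {intent: mean rating} for intents averaging below the threshold.

    Intents with fewer than min_count ratings are skipped so a single
    bad score doesn't trigger a rewrite.
    """
    by_intent: Dict[str, List[int]] = defaultdict(list)
    for intent, score in ratings:
        by_intent[intent].append(score)
    flagged = {}
    for intent, scores in by_intent.items():
        mean = sum(scores) / len(scores)
        if len(scores) >= min_count and mean < threshold:
            flagged[intent] = mean
    return flagged

if __name__ == "__main__":
    log = [("billing", 2), ("billing", 3), ("billing", 2),
           ("shipping", 5), ("shipping", 4), ("shipping", 5),
           ("returns", 1)]
    print(low_rated_intents(log))  # only "billing" is flagged
```

Flagged intents point to the scripts or knowledge sources worth revisiting first.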

Test Across Devices and Screen Sizes

Avatars may look different depending on the device. Make sure your avatar works smoothly on desktop, tablet, and mobile. Optimize resolution, audio, and load times to avoid glitches or awkward lags.

Provide Fallback Paths

Sometimes the avatar won’t have an answer. Build in polite fallback responses and escalate to a human when needed. A well-designed avatar should know when to pass the baton.
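One common pattern is to gate answers on a confidence score and escalate after repeated misses. This is a minimal sketch assuming your retrieval or model layer exposes a 0-1 confidence value; `FallbackPolicy`, the thresholds, and the `HANDOFF_TO_HUMAN` sentinel are hypothetical choices, not a platform feature.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Answer:
    text: Optional[str]
    confidence: float  # 0.0-1.0, assumed to come from the retrieval or model layer

FALLBACK = "I'm not sure about that one. Could you rephrase, or would you like me to connect you with a colleague?"
HANDOFF = "HANDOFF_TO_HUMAN"  # hypothetical sentinel your app maps to a live-agent transfer

class FallbackPolicy:
    """Reply when confident; otherwise fall back, and hand off after repeated misses."""

    def __init__(self, min_confidence: float = 0.6, max_misses: int = 2) -> None:
        self.min_confidence = min_confidence
        self.max_misses = max_misses
        self.misses = 0  # consecutive low-confidence turns

    def respond(self, answer: Answer) -> str:
        if answer.text and answer.confidence >= self.min_confidence:
            self.misses = 0  # a good answer resets the streak
            return answer.text
        self.misses += 1
        if self.misses >= self.max_misses:
            return HANDOFF
        return FALLBACK
```

Counting consecutive misses, rather than total misses, keeps one early stumble from forcing a handoff later in a long conversation.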

Monitor and Iterate

The best avatars evolve over time. Use user data and A/B testing to refine appearance, responses, and behaviors. Try different knowledge sources and personalities to see what works best for your users.
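For A/B tests, each user should see the same avatar variant on every visit. Hashing the experiment name together with a user ID gives a stable, roughly uniform assignment without storing state. The function and variant names below are placeholders.

```python
import hashlib
from typing import Sequence

def assign_variant(user_id: str, experiment: str, variants: Sequence[str]) -> str:
    """Deterministically bucket a user so they see the same avatar variant every visit.

    Hashing experiment + user_id keeps assignments stable per user but
    independent across experiments.
    """
    if not variants:
        raise ValueError("need at least one variant")
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]

if __name__ == "__main__":
    arms = ("avatar_formal", "avatar_casual")
    for uid in ("user-1", "user-2", "user-3"):
        print(uid, assign_variant(uid, "greeting_test", arms))
```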

D-ID’s Role in Interactive Avatar Solutions

D-ID makes it simple for developers and product teams to build real-time AI avatar interfaces that feel natural and human. Our platform was designed to integrate seamlessly into enterprise stacks while offering creative flexibility for design teams and builders.

Core Features:

- Real-Time Video Rendering: Create talking avatars from a single image in seconds with synced facial expressions and gestures.

- Multilingual Interaction: Translate content across over 100 languages and auto-sync facial movement to match each one.

- Agentic AI Integration: Pair avatars with D-ID’s AI Agents to build conversational systems that listen, understand, and respond in context.

- Cloud-Based API Access: Connect avatars directly to your application via secure, scalable APIs.

- No Design Expertise Required: Upload a photo/video and script, then let the platform do the rest. Perfect for non-designers and fast-moving teams.

D-ID’s avatars are already being used in banking, education, healthcare, and retail to make digital experiences more human. Our clients use them for customer onboarding, product demos, compliance training, and even talent acquisition.

As AI becomes more integrated into everyday tools, interactive avatars are the next step in making those tools approachable, trustworthy, and engaging.

Next Steps: Build Real-Time Avatars That Speak for Your Brand

Real-time AI avatars are transforming how we interact with digital products. They are fast, flexible, and incredibly effective at creating human-like engagement across industries.

With D-ID, you can:

- Launch fully interactive avatars in a matter of days

- Connect them to live knowledge sources and conversational agents

- Deliver multilingual content without manual re-recording

- Embed avatars into any website, app, or internal system

Ready to try it? Book an intro call or learn more about our AI Agent Frameworks. Your avatar is only a few clicks away.

FAQs

How do interactive AI avatars differ from standard AI avatars?

Interactive AI avatars are capable of responding to users in real time. They use speech recognition, language understanding, and dynamic rendering to simulate a natural conversation. Standard AI avatars are often pre-recorded or limited to one-way communication. Interactive avatars listen, process, and respond to input, allowing them to participate in real-time interactions. This makes them more versatile and effective in user-driven environments like customer service portals or e-learning platforms.

How do real-time AI avatars improve the user experience?

Real-time AI avatars create a sense of presence and responsiveness. Instead of passively watching a video, users interact with an avatar that reacts to their questions and behaviors. This can boost engagement, improve information retention, and increase trust. When avatars deliver personalized answers with natural facial expressions, users feel understood and supported. These avatars are especially useful in high-touch digital experiences, where emotional connection and clarity are key to conversion and satisfaction.

Can interactive AI avatars be integrated into existing platforms?

Yes. Interactive AI avatars are designed for easy integration into websites, mobile apps, customer portals, and enterprise systems. Most platforms use standard APIs, SDKs, or embed codes to bring avatars to life within an existing interface. D-ID’s real-time avatar solution offers flexible deployment options for developers, whether you’re building with JavaScript, React, or server-side frameworks. You can also connect avatars to databases, chatbots, or LLMs to personalize conversations even further.

How can developers customize interactive AI avatars?

Developers can customize avatars by adjusting visual appearance, scripting behavior, voice style, and integration points. With D-ID’s tools, you can upload a headshot, select a language and voice, and define the avatar’s personality. You can also configure how it responds to input, which knowledge sources it draws from, and what fallback paths to follow. This customization enables avatars to serve as trainers, sales reps, or support agents depending on the application.

What role does D-ID play in interactive avatar solutions?

D-ID provides the infrastructure and creative tools for building lifelike, interactive avatars that respond to users in real time. Our platform combines voice synthesis, video rendering, and AI agent integration to help companies launch conversational avatars quickly. We offer APIs, UI components, and localization support to ensure your avatar fits perfectly within your product or service. With D-ID, you get scalable, production-ready avatars built to engage users across markets and languages.

Libi Michelson